A 3D driving game where the player controls the steering with camera-tracked arm movements.

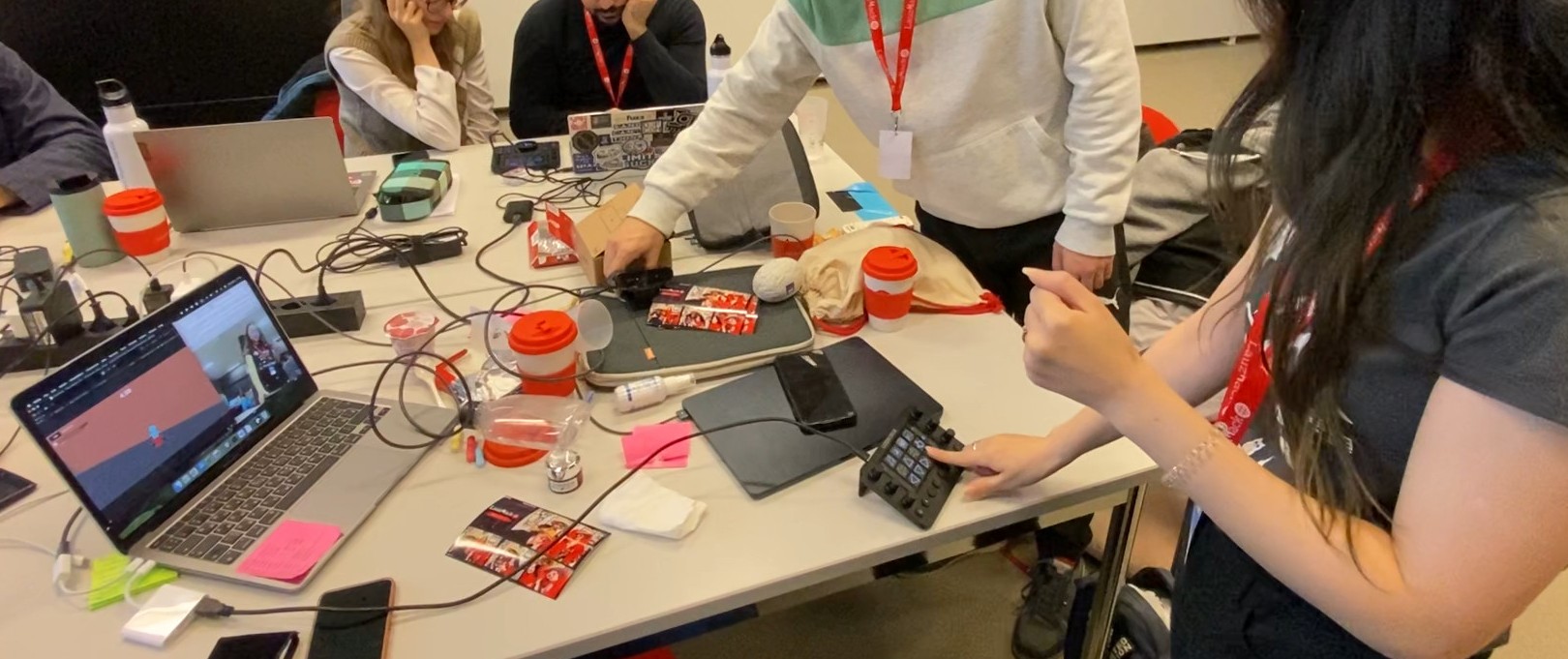

The project was developed in 24 hours during the LauzHack hackathon in Lausanne, Switzerland, where we finished in 2nd place overall.

Core idea

Most driving simulators and games use a dedicated physical steering wheel controller for user input, because it is much more immersive than a keyboard or joystick. However, few people have such a device readily available. We address this limitation with a system that uses pose tracking to determine the position of the user’s arms and uses it as input. Players steer an imaginary wheel to control the virtual avatar.

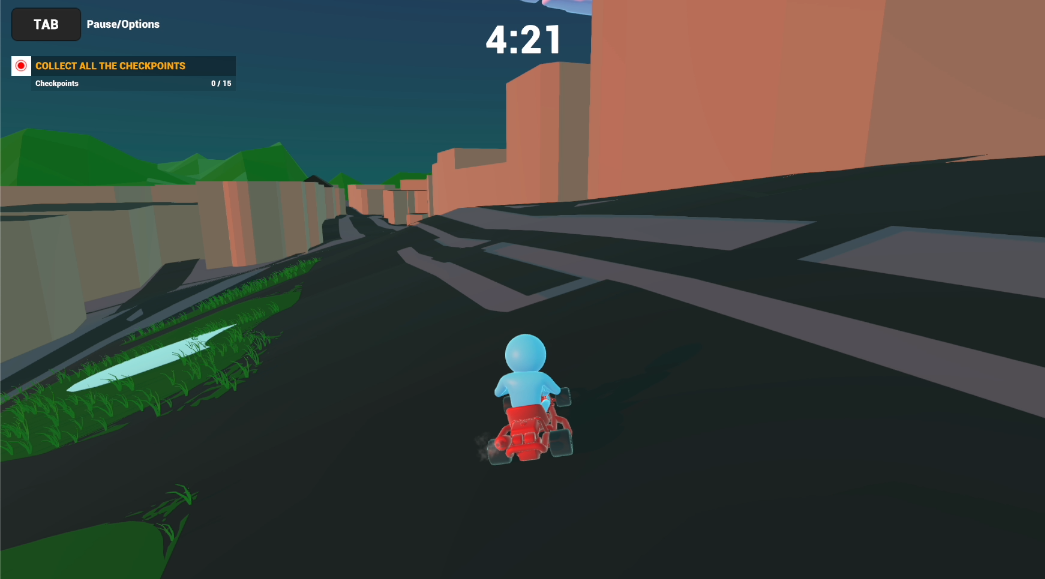

Gameplay

The user drives around a virtual replica of the city center of Lausanne. Movement is unconstrained within the scene limits.

For demo purposes, we designed a short circuit around the main parts of the city. There are several rings that represent checkpoints; the user needs to go through all of them, in order, before time runs out.
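The checkpoint rule described above (rings crossed in order, before a time limit) can be sketched as a small state tracker. This is only an illustration in Python, not the code used in the Unity project; the class name `CheckpointRun`, its fields, and the status strings are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class CheckpointRun:
    """Hypothetical tracker for an ordered ring circuit with a time limit."""
    total_checkpoints: int
    time_limit: float        # seconds allowed for the whole circuit
    next_index: int = 0      # index of the ring the player must cross next
    elapsed: float = 0.0     # seconds spent so far

    @property
    def status(self) -> str:
        # Crossing every ring wins; otherwise running out of time loses.
        if self.next_index >= self.total_checkpoints:
            return "won"
        if self.elapsed >= self.time_limit:
            return "lost"
        return "running"

    def tick(self, dt: float) -> None:
        # Advance the clock only while the run is still in progress.
        if self.status == "running":
            self.elapsed += dt

    def pass_ring(self, ring_index: int) -> bool:
        # A ring counts only if it is the next one in order and the run is live.
        if self.status != "running" or ring_index != self.next_index:
            return False
        self.next_index += 1
        return True
```

In a game loop, `tick` would be called once per frame with the frame time, and `pass_ring` whenever the car enters a ring's trigger volume. Rings crossed out of order are simply ignored.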

Note that the gameplay system is very limited, since we did not have time to develop it further and designed it specifically for the short hackathon demo.

Pose tracking

The pose tracking runs in a separate Python script, so it can run in parallel with the main Unity project. We use OpenCV and MediaPipe to detect the pose, then compute the angle between the wrists to determine the steering angle, which is then used to steer the car.
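As a rough illustration of the wrist-angle idea, the sketch below turns two wrist positions into a steering value. It assumes the wrists are already available as pixel coordinates (for example, taken from a pose detector's wrist landmarks); the function names, the sign convention, and the 90-degree limit are assumptions made for this example, not the project's actual values.

```python
import math


def wrist_angle(left_wrist, right_wrist):
    """Angle in degrees of the line joining two wrists given as (x, y) pixels.

    `left_wrist` is the wrist on the left side of the image and `right_wrist`
    the one on the right. Image y grows downward, so the result is 0 when the
    wrists are level and positive when the image-right wrist is lower.
    """
    dx = right_wrist[0] - left_wrist[0]
    dy = right_wrist[1] - left_wrist[1]
    return math.degrees(math.atan2(dy, dx))


def normalized_steering(angle_deg, max_angle_deg=90.0):
    """Map an angle to [-1, 1], clamping beyond the assumed maximum turn."""
    return max(-1.0, min(1.0, angle_deg / max_angle_deg))


# Example: the image-right wrist is lower than the image-left wrist by as
# much as they are apart horizontally, i.e. a 45-degree tilt.
angle = wrist_angle((200, 200), (400, 400))
steer = normalized_steering(angle)
```

Whether a positive value means steering left or right depends on whether the camera image is mirrored, so the sign may need to be flipped. Pixel coordinates are used here because coordinates normalized separately per axis would distort the angle on non-square frames.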

Note that the accuracy of the tracking depends heavily on the environment. From our experiments, we determined that a camera shot where the user is centered and visible from head to hips, facing the camera and with no other people in the frame, works best. However, the system is quite robust and still works in many suboptimal scenarios.

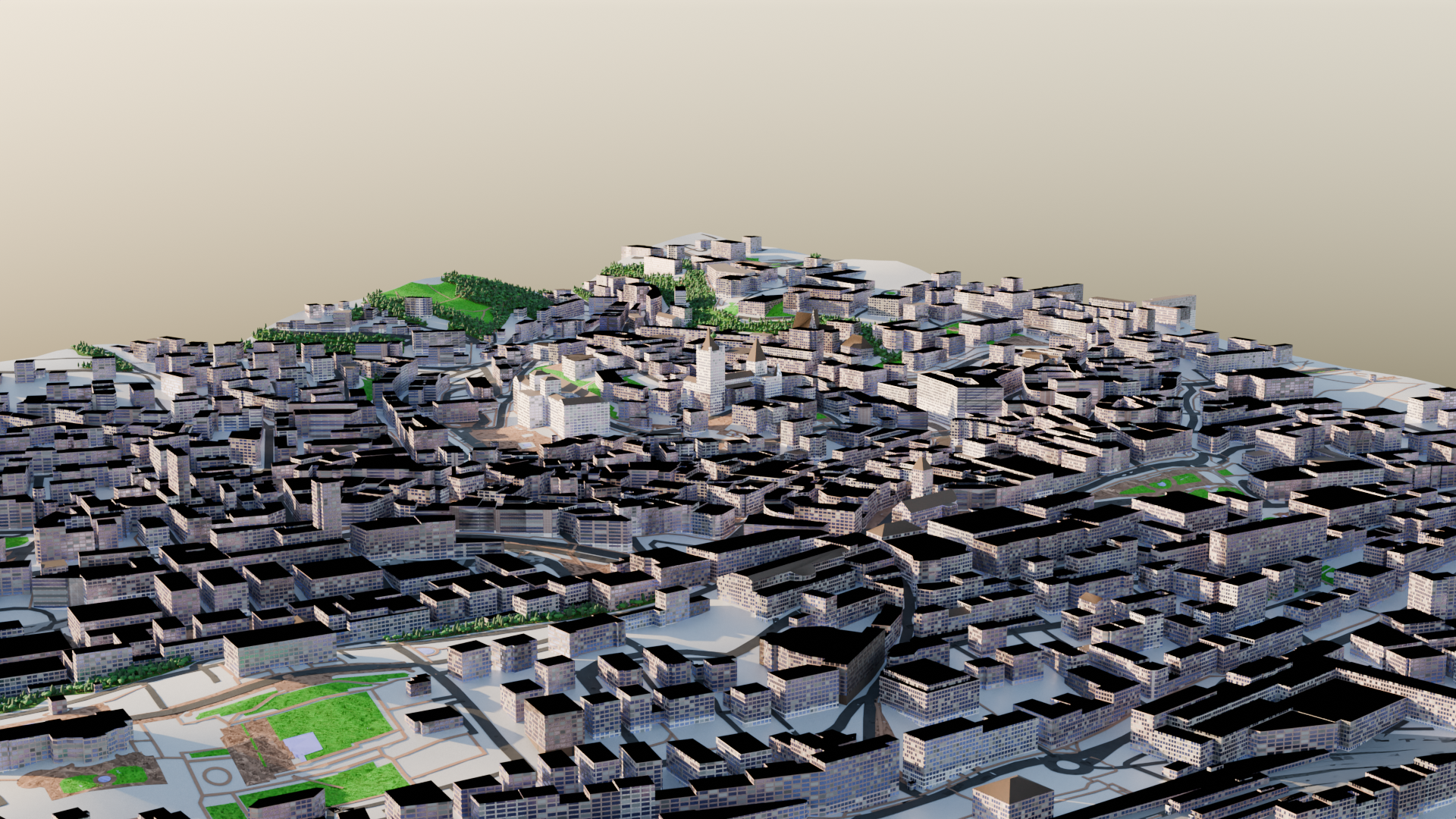

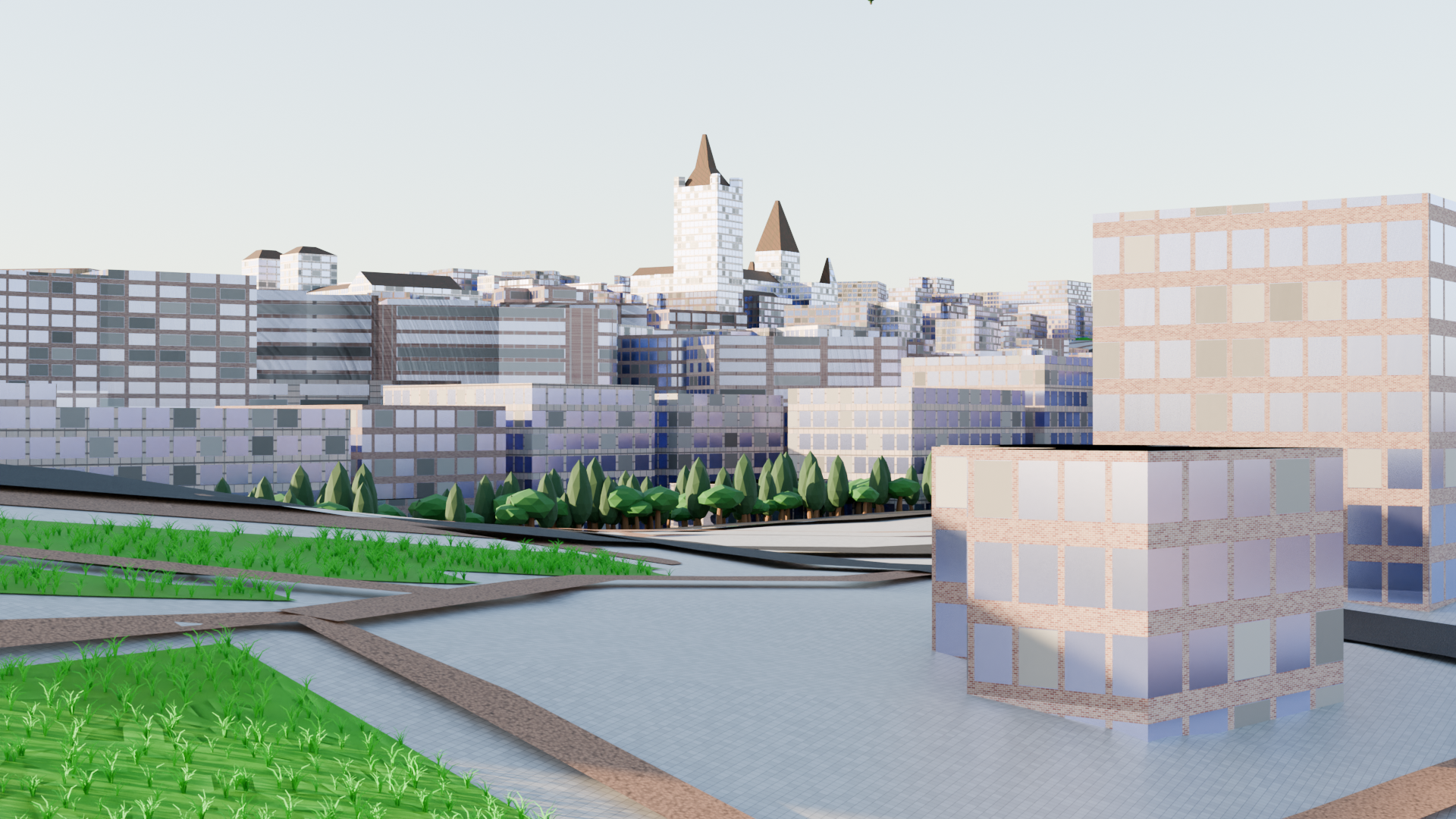

3D world

This is the part of the project that I developed.

To quickly obtain a roughly accurate 3D model of the real city, we used Blender’s Blosm plugin to get elevation and building data. In some cases, we had to manually adjust parts of the scene to get a visually pleasing result.

We used custom procedural materials built with Blender’s node system to generate textures (walls, roofs, asphalt, sidewalks, ground, vegetation, etc.). We also modeled some low-poly vegetation assets and used particle systems to populate the scene.

Note that we were unable to properly bake all the procedural textures to be exported into Unity. Thus, the final Unity project only uses plain materials without textures.

Finally, we created some custom building assets for the most iconic city landmarks (e.g. the cathedral).

Command board

Our project optionally uses a tactile control panel to control the car motion (speed, reverse), set scene parameters (weather, background music) or trigger computer utilities (screenshot). We also planned other features, such as turning the headlights on and off or visualizing the virtual driver’s status, but we did not have time to implement them in the final game.

Note that the control panel is optional, and all parameters can be changed with a regular keyboard. However, we think that it makes the game more immersive. It also lets the user play at some distance from the webcam, which makes tracking more precise. This is mainly useful with a laptop’s built-in camera, although external cameras are also supported.